Our clients are among the most innovative startups in the Web 2.0 and Consumer Internet space, and we see a constant need among them to aggregate data from multiple publicly available sources on the web. For instance, if you run a shopping search engine or a travel search site, you have to crawl retail merchants, portals and e-commerce sites to gather product descriptions, inventory, pricing and availability data. Some of these sites offer APIs, which you should use where they exist, but the site you are interested in may not have one, and even when it does, the API may not expose the information that you need.

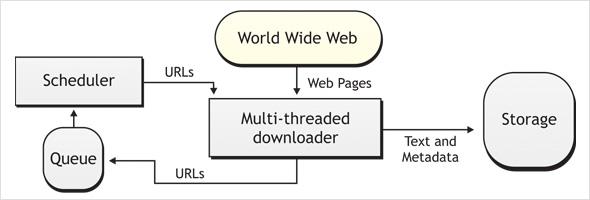

Crawlers download the visited pages and create the data index in an automated manner by following a specified link pattern. The index is then parsed and analyzed using matching algorithms to extract relevant information. Thus the raw text from the web pages is transformed into structured data.
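As a rough illustration of this crawl, index and parse loop, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `shop.example` URLs, the in-memory `PAGES` dictionary that stands in for real HTTP fetches, the `/product/<id>` link pattern, and the regular expressions used as the "matching algorithm". A production crawler would also need politeness delays, robots.txt handling and far more robust parsing.

```python
import re
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "site" standing in for real HTTP fetches.
PAGES = {
    "http://shop.example/": '<a href="/product/1">A</a> <a href="/about">About</a>',
    "http://shop.example/product/1": (
        '<h1>Red Kettle</h1><span class="price">$24.99</span>'
        '<a href="/product/2">next</a>'
    ),
    "http://shop.example/product/2": '<h1>Blue Mug</h1><span class="price">$7.50</span>',
    "http://shop.example/about": "<h1>About us</h1>",
}


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, link_pattern, fetch=PAGES.get):
    """Download pages breadth-first, following only links that match link_pattern."""
    index = {}                      # url -> raw HTML (the "data index")
    queue = deque([start_url])
    seen = {start_url}
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:            # page could not be opened; skip it
            continue
        index[url] = html
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            absolute = urljoin(url, href)
            if absolute not in seen and link_pattern.search(absolute):
                seen.add(absolute)
                queue.append(absolute)
    return index


# Assumed page layout: a title in <h1> and a price in a span with class "price".
TITLE_RE = re.compile(r"<h1>(.*?)</h1>")
PRICE_RE = re.compile(r'class="price">\$([0-9]+\.[0-9]{2})<')


def extract(index):
    """Turn raw indexed HTML into structured records; pages without a price are ignored."""
    records = []
    for url, html in sorted(index.items()):
        title = TITLE_RE.search(html)
        price = PRICE_RE.search(html)
        if title and price:
            records.append(
                {"url": url, "title": title.group(1), "price": float(price.group(1))}
            )
    return records


index = crawl("http://shop.example/", re.compile(r"/product/\d+$"))
records = extract(index)
```

With the sample pages above, the crawler never visits the `/about` page because it does not match the link pattern, and the start page is indexed but yields no record because it has no title or price.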

Typical Crawler Architecture

Crawling in an intelligent and effective manner is a challenging task because of the large volume of pages, the fast rate of change, and dynamic page generation. These characteristics of the web combine to produce a wide variety of possible crawlable URLs. Because most popular websites are updated constantly, it is very likely that new pages have been added to a site since it was last crawled, or that pages already downloaded have been updated or even deleted. For instance, a product on a page that the crawler indexed may sell out within a few hours, or its price may change. Engineers who create and configure crawlers therefore spend time fine-tuning the criteria that decide which pages are downloaded and how often those pages are checked for updates.
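One common way to decide how often a page is rechecked is to adapt the revisit interval to how often the page actually changes. The Python sketch below is only one possible policy, not a description of any particular crawler: the `RecrawlScheduler` class, the halve-on-change and double-on-no-change rule, and the 1 hour and 24 hour bounds are all assumptions chosen for illustration.

```python
import hashlib
import heapq

MIN_INTERVAL = 1.0    # hours; assumed lower bound between visits
MAX_INTERVAL = 24.0   # hours; assumed upper bound between visits


class RecrawlScheduler:
    """Revisits pages more often when their content changes, less often when it is stable."""

    def __init__(self):
        self._heap = []     # (next_due_time, url), ordered by due time
        self._state = {}    # url -> (content_hash or None, current_interval)

    def add(self, url, now=0.0, interval=4.0):
        """Register a URL; it is due for its first fetch immediately."""
        self._state[url] = (None, interval)
        heapq.heappush(self._heap, (now, url))

    def next_due(self, now):
        """Pop and return every URL whose due time is at or before `now`."""
        due = []
        while self._heap and self._heap[0][0] <= now:
            due.append(heapq.heappop(self._heap)[1])
        return due

    def record_fetch(self, url, content, now):
        """Compare the fetched content with the last visit and reschedule the URL."""
        old_hash, interval = self._state[url]
        new_hash = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if old_hash is not None:
            if new_hash != old_hash:
                interval = max(MIN_INTERVAL, interval / 2)   # changed: check sooner
            else:
                interval = min(MAX_INTERVAL, interval * 2)   # stable: check later
        self._state[url] = (new_hash, interval)
        heapq.heappush(self._heap, (now + interval, url))
        return interval
```

Under this policy a product page whose price keeps changing drifts toward hourly checks, while a static page drifts toward one check a day. Hashing the whole page is a simplification; in practice you would usually hash only the extracted fields so that rotating ads or timestamps do not count as changes.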

Given these inherent challenges in mining data from the web, you have to supplement the automated effort with manual data cleansing and data reconciliation to ensure a high level of data accuracy and relevance. We work with several clients to review large sample data sets and identify the following common issues:

- Data being mapped incorrectly to your schema because of inconsistencies in the source web pages that cause errors in the parsers

- Relevant pages in the source website that the crawler skipped because of a problem either opening or following a link

- Certain metadata that has changed in a large percentage of pages
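Parts of this review can be pre-screened automatically so that manual effort goes to the records most likely to be wrong. The Python sketch below checks a sample for the three issues listed above. The schema in `REQUIRED_FIELDS`, the `review_sample` function, the shape of the records and the 50% change threshold are hypothetical, chosen only to make the example concrete.

```python
# Hypothetical target schema: field name -> expected Python type.
REQUIRED_FIELDS = {"title": str, "price": float}


def review_sample(records, previous, expected_urls, change_threshold=0.5):
    """Flag mismapped records, skipped pages, and fields that changed on many pages.

    records:        list of dicts from the current crawl, each with a "url" key
    previous:       dict of url -> record from the previous crawl
    expected_urls:  URLs a reviewer knows should have been crawled
    """
    crawled = {r["url"] for r in records}

    # 1. Records whose fields are missing or have the wrong type for the schema.
    mismapped = sorted(
        r["url"]
        for r in records
        if any(not isinstance(r.get(f), t) for f, t in REQUIRED_FIELDS.items())
    )

    # 2. Pages that should have been crawled but produced no record.
    skipped = sorted(set(expected_urls) - crawled)

    # 3. Fields whose value differs from the previous crawl on a large share of pages.
    comparable = [r for r in records if r["url"] in previous]
    mass_changed = []
    for field in REQUIRED_FIELDS:
        changed = sum(
            1 for r in comparable if r.get(field) != previous[r["url"]].get(field)
        )
        if comparable and changed / len(comparable) >= change_threshold:
            mass_changed.append(field)

    return {"mismapped": mismapped, "skipped": skipped, "mass_changed": mass_changed}
```

A field that changes on most pages at once is not necessarily an error (a site-wide sale would look the same), which is why the output is a list of candidates for manual review rather than an automatic correction.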

We work with engineering teams to make the crawlers more effective by implementing algorithms that handle these exception cases. Over time, as the machine learning improves, the quality of the data that is indexed and parsed gets better.